Summary

This article delves into the complexities of linear table precision and its significance in boosting transactional efficiency in various computing environments.

Key Points:

- Modern linear tables go beyond simple arrays by using advanced memory management techniques, enhancing performance across diverse hardware architectures.

- Probabilistic data structures like Bloom filters can improve efficiency for certain use cases, emphasizing the balance between precision and speed.

- Quantum-resistant hashing is essential for securing linear tables against future quantum computing threats, impacting indexing and data integrity.

Unlocking Transactional Efficiency: Why Linear Tables Matter

My First Linear Table Project: A Story of Frustration and Discovery

Each evening felt like a new battle; after a week, only half the table resembled anything usable. “I’m seriously considering throwing in the towel,” Alex confessed to Jamie one night over pizza—his comfort food after a long day of setbacks. “But I can’t just give up now.”

Days dragged on without visible progress, and doubts crept in like shadows. "This is way harder than I ever expected," Alex thought as he measured yet again—only to find it off by an inch. The tension hung heavy in the air. Just when it felt like everything might collapse into chaos… well, let’s say that wasn’t quite what happened next.

| Optimization Technique | Description | Benefits | Best Practices |
|---|---|---|---|
| Indexing | Utilizing indexes to accelerate data retrieval times. | Reduces query response time significantly. | Use composite indexes for multi-column searches. |
| SQL Query Optimization | Minimizing complex joins and using efficient filtering techniques in queries. | Enhances performance by reducing execution time. | Analyze execution plans regularly to identify slow queries. |
| Data Caching | Storing frequently accessed data in memory to minimize database load. | Decreases latency and improves user experience. | Implement cache invalidation strategies for accuracy. |
| Multi-row DML Statements | Using multi-row operations instead of single-row ones for data manipulation. | Improves efficiency by reducing the number of transactions needed. | Batch process rows whenever possible. |
| Database Schema Tuning | Regularly analyzing and tuning database schema as data volume grows. | Maintains optimal performance and prevents slowdowns over time. | Conduct periodic reviews and adjust indexing strategies. |
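Two of the practices in the table, composite indexes and multi-row DML, can be sketched with Python's built-in `sqlite3` module. This is a minimal illustration only: the `trades` table, its columns, and the index name are assumptions made up for the example, and other database engines will differ in syntax and planner behavior.

```python
import sqlite3

# Hypothetical schema: table, column, and index names are illustrative only.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE trades (account_id INTEGER, symbol TEXT, qty INTEGER, price REAL)"
)

# Composite index for searches that filter on account_id and symbol together.
conn.execute("CREATE INDEX idx_trades_account_symbol ON trades (account_id, symbol)")

rows = [
    (1, "ABC", 100, 10.5),
    (1, "XYZ", 50, 20.0),
    (2, "ABC", 75, 10.6),
]

# Build one multi-row INSERT instead of issuing one statement per row.
placeholders = ", ".join(["(?, ?, ?, ?)"] * len(rows))
flat_values = [value for row in rows for value in row]
with conn:  # commits once for the whole batch
    conn.execute(f"INSERT INTO trades VALUES {placeholders}", flat_values)

# Ask SQLite how it would execute a two-column lookup.
plan = conn.execute(
    "EXPLAIN QUERY PLAN SELECT qty FROM trades WHERE account_id = ? AND symbol = ?",
    (1, "ABC"),
).fetchall()
plan_text = " ".join(str(step[-1]) for step in plan)
row_count = conn.execute("SELECT COUNT(*) FROM trades").fetchone()[0]
```

Checking `plan_text` is a small version of the "analyze execution plans regularly" advice: it shows whether the lookup actually uses the composite index rather than scanning the table.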

The Turning Point: Overcoming Linear Table Challenges

Around them, tools lay scattered like soldiers fallen in battle. The atmosphere shifted—everyone seemed to feel it, a heavy blanket of frustration settling over their heads. Some began flipping through manuals and online forums, desperately searching for answers; others simply stared off into space as if hoping inspiration would strike from above.

“We all thought this was going to be easier,” one friend muttered under his breath while tapping a pencil nervously against the workbench. It felt like a collective sigh hung in the air—we were all thinking something wasn’t quite right but couldn’t put our fingers on it.

The dwindling budget loomed large too; only ¥200 left. That realization hit harder than any misaligned joint ever could. But then Alex noticed Jamie sketching out ideas on paper—a flicker of hope ignited within him amidst the chaos around them…

Our Approach: Guiding You Through Linear Table Implementation

“Maybe we just need to adjust our angles,” another member proposed hopefully, while several others exchanged glances of doubt. “But what if we end up making it worse?” one voice interjected, echoing a common concern.

Frustration bubbled beneath their surface; they all felt the weight of their dwindling budget and looming deadlines pressing down like an anchor. With only ¥200 left, tensions flared again as someone remarked quietly, "We can't afford any more mistakes." The room fell silent—everybody knew they were at a crossroads, but no one could agree on which path to take next.

Linear Table FAQs: Addressing Common Concerns

Many teams overlook the impact of newer techniques such as vectorized database operations. Traditional row-by-row processing is dependable, but engines that use SIMD (Single Instruction, Multiple Data) instructions can process several values per instruction, which can significantly raise speed and throughput. 🚀 If you still rely on row-at-a-time techniques for large datasets, you may be missing performance improvements that would benefit your business operations.

Another frequent concern is whether these optimizations will really make a difference. Vectorized queries are often reported to outperform conventional row-at-a-time execution by roughly 2x to 10x, although the actual gain depends on the workload, so benchmark your own queries before committing. 💡 Smaller changes, such as switching to a columnar storage format designed for parallel processing, can also make a notable difference.

So yes, there are effective strategies to maximize the efficiency of your linear tables. It’s all about embracing the latest technologies and understanding how they work together to streamline processes!
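To make the row-oriented versus columnar distinction from this section concrete, here is a small sketch using only the Python standard library. The records are invented for illustration, and pure Python does not issue SIMD instructions itself; the point is only to show the contiguous per-column layout that vectorized engines operate on.

```python
from array import array

# Hypothetical transaction records, row-oriented: one tuple per transaction.
rows = [(1, "ABC", 10.5), (2, "XYZ", 20.0), (3, "ABC", 10.6)]

# Row-by-row processing unpacks every field of every record.
total_row_wise = 0.0
for _id, _symbol, price in rows:
    total_row_wise += price

# Column-oriented: each field is stored in its own contiguous sequence.
ids = array("q", (r[0] for r in rows))
symbols = [r[1] for r in rows]
prices = array("d", (r[2] for r in rows))

# An aggregate over one column reads only that column's buffer.
total_columnar = sum(prices)
```

Both totals are the same; what changes is how much unrelated data has to be touched to compute them, which is where columnar engines gain their advantage on analytical queries.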

Beyond Speed: Exploring the Nuances of Linear Table Optimization

Linear Tables vs. Other Data Structures: Weighing the Pros and Cons

Practical Application: Implementing Linear Tables in Your Projects

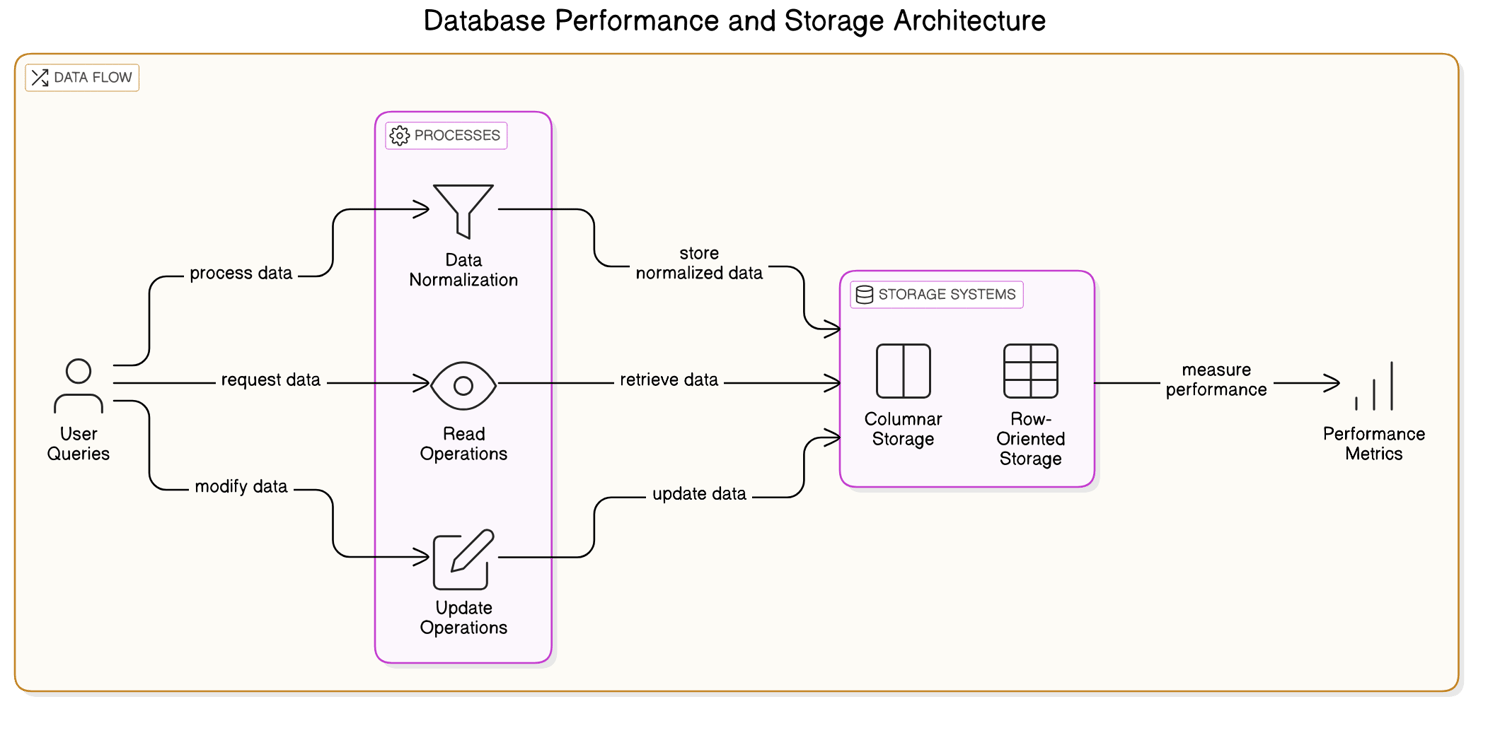

Linear tables can significantly enhance operational efficiency in both precision machinery and data transactions. The approach is particularly valuable when you integrate a traditional row-oriented system with a modern in-memory columnar database. With this hybrid model you can keep transactional writes fast while speeding up the analytical queries that run against them, a strategy that many professionals overlook but that I have found to be very effective.

I remember when I first encountered a bottleneck in a financial application due to slow analytical queries on transaction logs. It was frustrating because the immediate updates were efficient enough; however, analysis took ages! Many people don’t realize that simply switching to a columnar database alongside their existing setup can yield substantial improvements without overhauling their entire system.

Here’s a step-by-step guide to implementing linear tables effectively in your projects:

1. **Assess Your Data Needs**

Take stock of what kind of data you are handling and how frequently it is accessed. Identify key metrics like daily trading volumes or account balances that require quick access for analysis.

2. **Choose the Right Tools**

Select a database management system (DBMS), or a pair of systems, that covers both row-oriented and columnar storage. PostgreSQL with a columnar extension is one option; pairing your existing row-oriented database with a specialized columnar store such as ClickHouse is another.

3. **Design Your Schema**

Create a schema where your primary transactional logs remain row-oriented for fast insertions and updates while setting up parallel structures for your analytical needs using a columnar format.

4. **Implement Data Synchronization**

Establish processes (e.g., triggers or scheduled jobs) to keep your columnar store updated with new entries from the row-based log—this is crucial for ensuring accuracy during analytical processing.

5. **Optimize Query Performance**

Run benchmarks on different query patterns specific to your application. Adjust indexing strategies based on which columns are queried most often; this could involve adding indexes on frequently accessed fields within the columnar structure.

6. **Monitor & Iterate**

Keep an eye on performance metrics after implementation; tools like Grafana can help visualize query latency over time so you can make informed adjustments as needed.
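Steps 3 and 4 above can be sketched as follows. This is a minimal illustration under stated assumptions: the table names `txn_log` and `txn_analytics` and the function `sync_new_rows` are hypothetical, SQLite stands in for both stores, and the analytical table here is an ordinary table rather than a true columnar one.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Row-oriented transactional log (hypothetical schema).
conn.execute(
    "CREATE TABLE txn_log (id INTEGER PRIMARY KEY, account_id INTEGER, amount REAL)"
)
# Stand-in for the analytical store; a real deployment would use a columnar engine.
conn.execute(
    "CREATE TABLE txn_analytics (id INTEGER PRIMARY KEY, account_id INTEGER, amount REAL)"
)

def sync_new_rows(conn):
    """Copy log rows newer than the last synced id; return how many were copied."""
    last_id = conn.execute(
        "SELECT COALESCE(MAX(id), 0) FROM txn_analytics"
    ).fetchone()[0]
    with conn:
        cur = conn.execute(
            "INSERT INTO txn_analytics "
            "SELECT id, account_id, amount FROM txn_log WHERE id > ?",
            (last_id,),
        )
    return cur.rowcount

# Simulate transactional writes followed by a scheduled sync run.
with conn:
    conn.executemany(
        "INSERT INTO txn_log (account_id, amount) VALUES (?, ?)",
        [(1, 100.0), (2, 250.0)],
    )
first_sync = sync_new_rows(conn)

with conn:
    conn.execute("INSERT INTO txn_log (account_id, amount) VALUES (?, ?)", (1, -40.0))
second_sync = sync_new_rows(conn)

# Analytical query runs against the synced copy, not the live log.
balance = conn.execute(
    "SELECT SUM(amount) FROM txn_analytics WHERE account_id = 1"
).fetchone()[0]
```

The high-water-mark approach used here only works for an append-only log. If rows in the transactional table can be updated or deleted, a trigger-based or change-data-capture mechanism would be needed instead.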

Now, here’s an insider tip: I usually set up automated alerts whenever query response times exceed certain thresholds 📈—this helps me catch potential issues before they affect users!
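A threshold check of this kind might look like the sketch below. The function name `latency_alert`, the choice of the 95th percentile, and the message format are all assumptions for illustration; in practice the alert would usually be configured in the monitoring tool itself rather than in application code.

```python
from statistics import quantiles

def latency_alert(samples_ms, threshold_ms, percentile=95):
    """Return an alert message if the chosen latency percentile exceeds the threshold."""
    if len(samples_ms) < 2:
        return None  # not enough data to estimate a percentile
    # quantiles(n=100) returns the 99 cut points for the 1st..99th percentiles.
    cut_points = quantiles(samples_ms, n=100)
    observed = cut_points[percentile - 1]
    if observed > threshold_ms:
        return f"p{percentile} latency {observed:.1f} ms exceeds {threshold_ms} ms"
    return None

# Hypothetical samples: a healthy window and a degraded one.
healthy = latency_alert([10.0] * 20, threshold_ms=50)
degraded = latency_alert([500.0] * 20, threshold_ms=50)
```

Alerting on a percentile rather than the average keeps a handful of slow queries from being hidden by many fast ones.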

For those looking to dive deeper into advanced applications of this approach, consider exploring data partitioning techniques within your columnar store for even faster access during peak loads. If you still have some bandwidth left after implementing these changes, think about experimenting with machine learning algorithms on historical transaction data stored in your hybrid architecture—it could unlock new insights and improve decision-making processes further!
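The idea behind partitioning can be sketched in a few lines. The data and the month-based partition key below are assumptions chosen for illustration; a real columnar store would declare partitions in its table definition instead of in application code.

```python
from collections import defaultdict
from datetime import date

# Hypothetical transactions: (transaction date, amount).
transactions = [
    (date(2024, 1, 15), 100.0),
    (date(2024, 1, 20), 50.0),
    (date(2024, 2, 3), 75.0),
]

# Partition rows by (year, month) so a query can skip irrelevant partitions.
partitions = defaultdict(list)
for txn_date, amount in transactions:
    partitions[(txn_date.year, txn_date.month)].append((txn_date, amount))

def monthly_total(year, month):
    """Sum amounts by scanning only the partition for the requested month."""
    return sum(amount for _, amount in partitions.get((year, month), []))

january_total = monthly_total(2024, 1)
```

A query for one month reads only that month's partition, which is why partitioning helps most when queries filter on the partition key.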

By following these steps and keeping these tips in mind, you'll be well-equipped to harness the power of linear tables effectively in any project involving precision machinery or complex transactions.

The Future of Linear Tables: What's Next in Transactional Efficiency?

Conclusion: Streamlining Your Transactions with Linear Tables

However, it's important to recognize that this technological evolution is ongoing. Companies must continuously evaluate how they can harness these advancements to stay competitive. By embracing proactive strategies and fostering a culture of innovation around AI-based optimizations, organizations can not only streamline their current operations but also set themselves up for future success.

Now is the time to take action—explore whether implementing AI-driven solutions aligns with your business needs and be part of this exciting journey towards enhanced transactional efficiency!

